As product managers and leaders, we constantly face high-stakes decisions that can make or break our products and businesses. Should we invest in feature X or Y? How should we prioritize our backlog? Which customer segments should we focus on? The sheer volume and complexity of these choices can be overwhelming.

In an ideal world, we’d have perfect information and infinite resources to thoroughly explore every option before committing. But in reality, we’re often operating in an uncertain environment with limited data, tight deadlines, and competing priorities. The pressure to ship quickly and hit our targets can lead to shortcuts and gut-based decision-making.

We’ve all been there: the exec swoops in with a pet project that suddenly jumps to the top of the roadmap. The loudest voice in the room dominates the feature debate. The team gets stuck in analysis paralysis, endlessly debating options without a clear way to evaluate them. Or, at the other extreme, we rush into build mode without validating our assumptions, only to discover major flaws after launch.

These common pitfalls can be costly on multiple levels. Misaligned features can tank engagement and revenue. Constant thrash and redirects burn out the team. Lack of customer understanding leads to building the wrong things. In today’s globalized market, a narrow perspective can cause you to miss massive growth opportunities.

But it doesn’t have to be this way. Adopting a defined approach to product decision-making (where data and evidence rule) can increase our chances of success, even in the face of uncertainty. It’s about intentionally setting goals, evaluating ideas, incorporating diverse inputs, and leveraging lightweight experiments to test our riskiest assumptions before going all-in.

Over the past decade at Product Collective, I’ve seen the power of this approach firsthand through the product leaders who have collaborated with us, spoken at our events, and shared what has worked best for their teams. I’ve watched teams go from spinning their wheels to confidently shipping game-changing features. In this essay, I’ll share some of the most impactful mindsets and tactics you can apply today to improve your product decision skills.

We’ll dive into:

- The GIST framework for defining and tracking meaningful Goals, Ideas, Steps, and Tasks

- Mental models like ICE scoring and the Confidence Meter to ruthlessly prioritize

- How design sprints provide a structured process to prototype and validate rapidly

- The business case for inclusive decision-making and how to bake it into your process

- Tips for creating a culture of experimentation and learning within your organization.

Whether you’re a new product manager looking to build your decision-making muscles or a seasoned leader aiming to scale an evidence-based approach across your company, this will give you the tools and inspiration to make more informed, impactful choices.

Let’s dive in…

Start with clear goals and metrics

One of the most critical foundations of evidence-based decision-making is clearly defining success. What are you trying to achieve, and how will you know if you’ve achieved it? Without this clarity, it’s all too easy to get lost in a sea of competing priorities and pet projects.

As Itamar Gilad, internationally acclaimed author, speaker, and coach, emphasizes in his book Evidence-Guided, the first step is to align on your product Goals. These should be objective, measurable targets that reflect the value you aim to create for users and the business. Gilad recommends using the GIST framework to break this down:

- Goals: The high-level outcomes you’re driving towards, usually mirroring company OKRs

- Ideas: Hypotheses for features or improvements that could help achieve the Goals

- Steps: The actions needed to validate and implement the highest-potential Ideas

- Tasks: The granular units of work that make up each Step, managed in your project tracking tool of choice

“Goals are supposed to paint the end state, to define where we want to end up,” Gilad explains. “The evidence will not guide you unless you know where you want to go.”

A key part of the Goal-setting process is defining your North Star Metric – the single measure that best captures your product’s core value. For Google Search, it might be the number of searches completed. For Airbnb, it could be nights booked. For Netflix, hours watched.

Gibson Biddle, former product leader at Netflix and Chegg, notes that Netflix’s North Star evolved as the business grew. In the early DVD-by-mail days, it was the percentage of members who watched 4+ hours in the past 30 days. As streaming took off, it shifted to monthly viewing hours per member. The lesson: your North Star Metric can and should change over time, but you should be confident enough in your choice that it doesn’t shift much in the short term.

Your North Star should clearly link to your business model and value exchange with customers. According to Gilad, the value exchange loop is the organization delivering as much value as it can to the market and capturing as much value back. If you deliver massive value to users but do not see it reflected in your revenue or engagement metrics, you may need to re-examine your North Star or monetization approach.

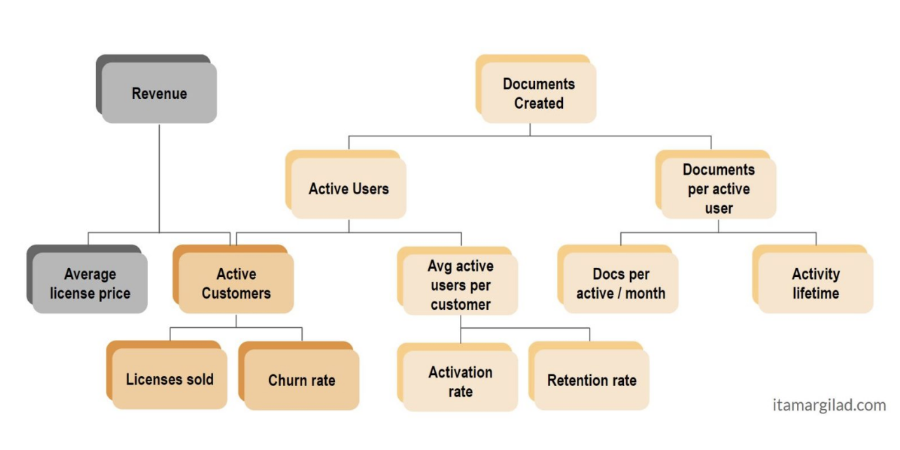

Gilad recommends building a metrics tree to connect the dots between your North Star and your team’s day-to-day work. Start with your top-line Goal, then break it down into the key inputs or drivers. For each driver, identify the sub-metrics that ladder up to it. Keep breaking it down until you get to measures that a single team or squad can directly influence.

Gilad shares an example metrics tree in this post, which I’ll reference below:

With this tree in place, each team can see how their slice of work contributes to the big picture. Product managers can use the tree to align their roadmaps and resourcing. Analysts and data scientists can monitor the key drivers and proactively identify issues and opportunities. Leaders can rally everyone around a shared definition of success.
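To make the idea concrete, here is a minimal Python sketch of a metrics tree. It is not Gilad’s example: the metric names, squad names, and the `Metric` and `team_level_metrics` helpers are all hypothetical, chosen only to show how a North Star breaks down into drivers until each leaf is something a single team can influence.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Metric:
    """One node in a metrics tree (hypothetical structure for illustration)."""
    name: str
    owner: Optional[str] = None  # team that can directly move this metric
    drivers: list["Metric"] = field(default_factory=list)


def team_level_metrics(node: Metric, path: tuple[str, ...] = ()) -> list[tuple[str, str, str]]:
    """Return (owner, metric, lineage from the top-line Goal) for every leaf metric."""
    here = path + (node.name,)
    if not node.drivers:
        return [(node.owner or "unowned", node.name, " > ".join(here))]
    rows = []
    for driver in node.drivers:
        rows.extend(team_level_metrics(driver, here))
    return rows


# Hypothetical tree for a subscription product; every name here is made up.
tree = Metric("Monthly viewing hours per member", drivers=[
    Metric("Active members", drivers=[
        Metric("Trial-to-paid conversion rate", owner="Growth squad"),
        Metric("Monthly churn rate", owner="Retention squad"),
    ]),
    Metric("Hours per active member", drivers=[
        Metric("Titles started per session", owner="Discovery squad"),
    ]),
])

for owner, metric, lineage in team_level_metrics(tree):
    print(f"{owner}: {metric}  [{lineage}]")
```

Printing the lineage next to each leaf is the point of the exercise: every squad can read off exactly how its metric ladders up to the North Star.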

So before you dive into generating Ideas and building your roadmap, take the time to get crisp on your Goals and metrics. Co-create them with your cross-functional stakeholders to ensure buy-in. Pressure-test them to ensure they’re realistic and achievable. And revisit them regularly as you gather data and market feedback.

Evaluate ideas objectively

Once you’ve aligned on your Goals and metrics, the real fun begins—generating and prioritizing Ideas to achieve them. This is where many teams fall into common decision-making traps. The loudest voice in the room drives the roadmap. Pet projects get pushed to the top without validation. Endless debate bogs down progress.

To avoid these pitfalls, it’s essential to establish a consistent, objective process for evaluating Ideas. One popular framework is the ICE scoring model, originally developed by Sean Ellis, founder of GrowthHackers. ICE stands for:

- Impact: How much will this Idea move the needle on our key metrics if it works?

- Confidence: How strong is our evidence that this Idea will have the desired impact?

- Ease: How much effort will it take to implement this Idea?

In his book Evidence-Guided, Gilad explains that the Confidence dimension is especially critical for making evidence-based decisions. Estimating potential Impact and Ease is not enough. We also need to assess the strength of our evidence and assumptions honestly.
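As a rough illustration, ICE scoring can be sketched in a few lines of Python. The essay doesn’t specify how to combine the three scores, so this sketch assumes a 0-10 scale for each dimension and multiplies them; some teams average them instead. The `Idea` class, the `rank` helper, and the backlog entries are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Idea:
    name: str
    impact: float      # 0-10: expected movement on the key metric if it works
    confidence: float  # 0-10: strength of the supporting evidence
    ease: float        # 0-10: higher means less effort to implement

    @property
    def ice(self) -> float:
        # Assumption: multiply the three scores. Averaging is another common convention.
        return self.impact * self.confidence * self.ease


def rank(ideas: list[Idea]) -> list[Idea]:
    """Sort ideas from highest to lowest ICE score."""
    return sorted(ideas, key=lambda idea: idea.ice, reverse=True)


# Hypothetical backlog; all scores are made up for illustration.
backlog = [
    Idea("Redesign onboarding checklist", impact=8, confidence=2, ease=4),
    Idea("Add progress indicator to signup", impact=5, confidence=7, ease=8),
    Idea("Rebuild recommendation engine", impact=9, confidence=3, ease=1),
]

for idea in rank(backlog):
    print(f"{idea.ice:6.0f}  {idea.name}")
```

Notice that the idea with the highest Impact guess lands last once low Confidence and low Ease are factored in, which is exactly the discipline the model is meant to enforce.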

Gilad created the Confidence Meter to help teams gauge confidence more rigorously. It’s a scale from 0-10, grouped into buckets:

- 0-4: Making claims based on opinions, beliefs, or third-party data. Confidence is low.

- 5-7: Light experimentation and user research to pressure-test thinking. Confidence is medium.

- 8-10: Direct data from experiments, prototypes, and live products. Confidence is high.

The Confidence Meter helps you judge how strong the evidence behind an Idea really is, and therefore how much to invest in it. For example, let’s say your team is debating two Ideas for improving onboarding completion rates. Idea A came from a stakeholder convinced it’s a silver bullet, but you have no supporting data. Idea B emerged from user interviews and a prototype test showing a 10% lift in completion. The Confidence Meter would score Idea A quite low, while Idea B would rank much higher.
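The three buckets translate into a simple lookup. The sketch below is my own simplification, not Gilad’s tool: the `confidence_bucket` function is hypothetical, fractional scores are assigned to the lower bucket as an assumption, and the scores given to Ideas A and B are invented to mirror the onboarding example.

```python
def confidence_bucket(score: float) -> str:
    """Map a 0-10 confidence score to the low/medium/high buckets described above."""
    if not 0 <= score <= 10:
        raise ValueError("confidence score must be between 0 and 10")
    if score < 5:
        return "low"      # opinions, beliefs, third-party data
    if score < 8:
        return "medium"   # light experimentation and user research
    return "high"         # direct data from experiments, prototypes, live products


# Hypothetical scores for the two onboarding Ideas discussed above.
idea_a = 1  # stakeholder conviction, no supporting data
idea_b = 6  # user interviews plus a prototype test

print("Idea A:", confidence_bucket(idea_a))
print("Idea B:", confidence_bucket(idea_b))
```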

Focusing on user outcomes, not just features, is another key aspect of objective Idea evaluation. It’s easy to love a clever UX enhancement or technical improvement. But it may not be worth the effort if it doesn’t ultimately change user behavior in a way that drives business results.

As Richard White, Founder and CEO of Fathom and former CEO of UserVoice, put it in a fireside chat I hosted with him: “It’s our job as a product team to translate feature requests into a deep understanding of the user’s underlying needs and desired outcomes. Ideas are great, but we can’t just plow ahead without validating the assumptions behind them.”

White recommends taking a step back to frame each Idea in terms of the user and business outcomes it will produce. What problem does it solve for the user? How does solving that problem drive value for the business? Leverage your metrics tree to draw a clear line from each Idea to your North Star.

When evaluating a set of Ideas, compare them based on their potential to drive outcomes, not just their surface characteristics. A simple heuristic is to score each Idea on a 1-5 scale for user value and business value, then plot them on a 2×2 matrix. The Ideas in the upper right quadrant (high user value, high business value) are your top candidates for further validation and implementation.
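Here is one way that heuristic might look in code. The cut-off between “low” and “high” is an assumption on my part (scores above 3 on the 1-5 scale count as high), and the `quadrant` helper, idea names, and scores are all hypothetical.

```python
def quadrant(user_value: int, business_value: int, threshold: int = 3) -> str:
    """Place an idea on a 2x2 grid; scores above `threshold` count as high."""
    for score in (user_value, business_value):
        if not 1 <= score <= 5:
            raise ValueError("scores must be on a 1-5 scale")
    user = "high" if user_value > threshold else "low"
    business = "high" if business_value > threshold else "low"
    return f"{user} user value / {business} business value"


# Hypothetical ideas with made-up (user value, business value) scores.
ideas = {
    "Saved searches": (5, 4),
    "Dark mode": (4, 2),
    "Upsell banner": (2, 5),
    "Footer redesign": (1, 1),
}

# Upper-right quadrant: the top candidates for further validation.
top_candidates = [
    name for name, (u, b) in ideas.items()
    if quadrant(u, b) == "high user value / high business value"
]
print(top_candidates)
```

Raising or lowering `threshold` is a cheap way to see how sensitive your shortlist is to where you draw the line.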

Of course, applying these frameworks doesn’t mean losing sight of vision and intuition. Gilad reminds us that you can’t simply shut down the founder or CEO’s ideas. It’s about looking at them critically and helping evolve them based on evidence.

Test Assumptions and Reduce Risk

Generating and prioritizing a strong set of Ideas is a big step forward. But even the most promising Ideas are assumptions until we test them with real users. As Laura Klein, author of Build Better Products and UX for Lean Startups, pointed out in a fireside chat I hosted with her, you can have all the faith in the world in your Idea, but in the end, the only votes that count come from your customers and their behavior.

Many teams stumble here. We’re often so eager to ship that we rush into building without properly vetting our assumptions.

In the GIST framework, this means moving from Ideas to Steps. Steps are all about validating your Ideas and assumptions so you can have the confidence to build the right thing. But validation doesn’t have to mean building the entire solution. There are many lightweight ways to test ideas before committing to a full build.

Gilad lays out a spectrum of validation methods in his Confidence Meter, ranging from low-cost, low-fidelity techniques to more involved, higher-fidelity approaches:

- Interviews and surveys to understand user needs and pain points

- Demand tests and fake door tests to gauge interest in a value prop

- User story mapping and design sketches to align on the UX flow

- Clickable prototypes to test information architecture and interactions

- Live-data prototypes and Wizard of Oz tests to simulate the full experience

- Crowd-funded MVP to assess demand and willingness to pay

The key is aligning your validation approach with the confidence level you need to move forward. Gilad suggests that, early on, speed and learning should be favored. Invest more in higher-fidelity testing to refine the UX and technical feasibility as you gain confidence.

One of the most powerful tools for rapidly validating Ideas is the design sprint, popularized by Jake Knapp and the Google Ventures team. Design sprints compress months of ideation, prototyping, and testing into a focused, five-day process.

At INDUSTRY: The Product Conference in Dublin, Ireland, Jonathan Courtney, co-founder of AJ&Smart, explained that design sprints can be a game-changer for aligning stakeholders, generating bold solutions, and getting user feedback fast. As Courtney said, “It’s like fast-forwarding into the future to see how customers react before you invest all the time and money to build.”

AJ&Smart has run over 300 design sprints with companies like Google, Adidas, LEGO, and the United Nations. For enterprise-scale, they’ve evolved the model into a four-week process:

- Prep week: Align on the problem space, gather existing research and data

- Design sprint week: Explore the problem, sketch solutions, build a prototype

- Iteration week: Refine the prototype based on user feedback and technical constraints

- Handoff week: Finalize specs for the MVP build and plan the next steps

“By the end of week four, you should have the confidence to say go or no-go on investing serious resources to build,” says Courtney. “You’ve gotten feedback from real users, not just your team and stakeholders. You’ve aligned everyone on what you’re building and why. That’s incredibly valuable.”

Of course, design sprints aren’t the only way to inject user feedback and experimentation into the product development process. The key is making it a regular habit, not a one-off event.

Klein emphasizes the need to build feedback loops into every stage of product development. This could be weekly user interviews, regular usability testing, or an experimentation program. The more you expose your Ideas to users, the faster you’ll learn and the more confidence you’ll have in your product decisions.

Make Inclusive Decisions for Global Impact

In today’s interconnected world, building products that resonate across diverse audiences is not just a nice-to-have; it’s a critical success factor. Laura Teclemariam, Director of Product Management at LinkedIn and formerly of Netflix, emphasized in a talk at the New York Product Conference that retention is about both engaging existing customers and welcoming new audiences. The way to do that is to be authentic in your product decisions.

To operationalize this value, Netflix developed a framework that Teclemariam calls “Inclusive Lens.” It has three key pillars:

- Be Authentic: Ground your product decisions in genuine insights about your diverse customer base. Avoid stereotypes and tokenism.

- Be Inclusive: Ensure diverse representation on your teams and in your user research. Amplify underrepresented voices in the decision-making process.

- Be Aware: Continuously educate yourself about your global user base’s cultural context and lived experiences. Anticipate and address potential barriers to adoption.

Teclemariam shares several tactics for putting the Inclusive Lens framework into practice:

- Set clear representation goals for your product teams and user research participants. Aim to reflect the diversity of your current and future customer base.

- Provide training and resources to help team members recognize and mitigate their biases. Create space for ongoing dialog about inclusion topics.

- Partner with Employee Resource Groups (ERGs) and DE&I experts to pressure-test product Ideas and surface blind spots.

- Segment your user research and experimentation to understand the needs and behaviors of specific cultural and regional audiences.

- Prioritize Ideas that balance broad appeal with authentic representation. Evaluate potential concepts through an Inclusion Lens, not just business metrics.

- Design for global accessibility from the start. Consider language localization, culturally relevant payment methods, and mobile-first access.

Teclemariam shared that Netflix baked Inclusion Lens reviews into the product development process, making them a core part of how they defined product value and made trade-off decisions.

The results speak for themselves. Netflix’s international subscriptions have grown from 1.9 million in 2011 to over 120 million in 2022. The company’s commitment to authentic, inclusive storytelling has fueled hit original series in markets from South Korea to South Africa to Brazil. And its platform has become a powerful vehicle for amplifying underrepresented voices and perspectives worldwide.

However, as Teclemariam emphasizes, inclusive product decision-making is never done. It requires ongoing investment, humility, and a willingness to learn from missteps. The key is to keep listening, iterating, and challenging yourself to improve.

Create a Culture of Evidence-Based Decision Making

Adopting an evidence-based approach to product decisions is as much about mindset as it is about methods. You can have all the right frameworks and techniques, but if your culture still defaults to HiPPOs (Highest-Paid Person’s Opinion) and gut-based calls, you’ll struggle to make it stick.

Laura Klein emphasizes that evidence-based decision-making needs to be more than just a process. It needs to be a core value that everyone from the C-suite to the intern believes in and demonstrates daily.

So, how can product leaders create this kind of culture? It starts with modeling the behavior yourself. Whenever you’re faced with a decision, visibly reach for data rather than defaulting to your opinion. Ask your teams to see the evidence behind their recommendations. And be transparent about your own assumptions and learning process. If you want your team to be evidence-based, you must show them that you’re willing to change your mind based on data. You need to celebrate the times when you were wrong, not just the times when you were right.

This can be uncomfortable, especially for leaders who are used to being seen as the expert with all the answers. But it’s essential for fostering a culture of learning and continuous improvement. I was reminded of this point during my fireside chat with Richard White…

“The best product leaders I’ve worked with are the ones who are constantly asking questions and seeking to understand,” says White. “They don’t pretend to have all the answers. They create space for their teams to experiment, fail, and learn. And they celebrate those learnings, even when they disprove their own pet hypotheses.”

One way to make this mindset shift concrete is to change how you talk about success and failure. Instead of just celebrating shipped features and meeting deadlines, celebrate the learnings and de-risking that happened along the way.

Itamar Gilad recommends starting each product review or retrospective with the question, “What did we learn?” rather than “What did we ship?” This simple reframe can greatly impact how teams approach their work. When you make learning the primary measure of success, it becomes less about churning out features and more about understanding your users better, validating your assumptions, and making smarter bets.

Of course, this doesn’t mean you should celebrate failure for failure’s sake or tolerate sloppy execution. But it does mean recognizing that not all failures are created equal. There’s a big difference between a failure that comes from a well-designed experiment or prototype and one that comes from neglecting to test your assumptions at all.

Operationalize with the Right Processes

Creating a culture of evidence-based decision-making is a critical first step. But to truly reap the benefits, you need to operationalize it with the right processes and rituals. This means evolving your product development approach as your company grows and matures.

The optimal product process looks very different at different stages of a company’s lifecycle. In the early startup days, speed and experimentation are paramount. As you grow and scale, more rigor and predictability become important. And as you expand globally, inclusivity and localization take center stage.

The key is regularly assessing your product processes and adapting them to your current context. Are you still making decisions like you did when you were a scrappy startup? If so, you may need to introduce structure and discipline to align a larger team. Are you expanding into new markets or customer segments? If so, you may need to update your research and testing processes to ensure you’re capturing diverse perspectives.

For AJ&Smart, design sprints became a game-changer for aligning stakeholders, generating bold ideas, and validating them quickly with users. However, design sprints are not a silver bullet and may not be the right ritual for your organization. They are one example of how an organization can find a ritual, or set of rituals, that works for it and build on it.

For those who want to try design sprints, Courtney recommends a “Lighthouse” model. Instead of trying to impose design sprints top-down across the whole organization, start with a single team working on a high-priority project. Give them the training and resources to run the full four-week process, including a prep week, a core sprint week, an iteration week, and a handoff week. Then, let the results speak for themselves. If the first sprint goes well, it will demonstrate the value of the approach to the rest of the organization, and you can start expanding to other teams.

Another key aspect of operationalizing evidence-based decision-making is to build in explicit buffers and iteration cycles rather than defaulting to hard-and-fast deadlines. As Klein contends, the goal of product development shouldn’t be to ship on a specific date. It should be to ship the right products and features that solve your users’ needs and drive your business goals.

Gilad offers a simple rule of thumb: For every week you spend building, spend at least one day testing with users. For every month you spend building, spend at least one week iterating based on user feedback. And for every quarter you spend building, spend at least one sprint validating your assumptions and refining your roadmap.
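To see what that rule of thumb implies for a real plan, here is a back-of-the-envelope sketch. The `validation_budget` function is hypothetical, and it rests on assumptions the rule itself doesn’t spell out: a month is treated as 4 weeks, a quarter as 12 weeks, and partial months and quarters are rounded down.

```python
def validation_budget(build_weeks: int) -> dict[str, int]:
    """Minimum validation time implied by the rule of thumb for a given build length.

    Assumptions (not from the source): a month is 4 weeks, a quarter is 12 weeks,
    and partial months and quarters are rounded down.
    """
    if build_weeks < 0:
        raise ValueError("build_weeks must be non-negative")
    return {
        "user_testing_days": build_weeks,         # one day per week of building
        "iteration_weeks": build_weeks // 4,      # one week per month of building
        "validation_sprints": build_weeks // 12,  # one sprint per quarter of building
    }


# A hypothetical 12-week (one quarter) build.
print(validation_budget(12))
```

For a one-quarter build, that works out to at least 12 days of user testing, 3 weeks of iteration, and 1 sprint of validation, which is a useful sanity check against a roadmap that budgets none of them.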

By operationalizing these evidence-based processes, you can create a virtuous cycle of continuous improvement. You’ll make better decisions faster. You’ll deliver more value to your users more efficiently. And you’ll create a more engaged and empowered product team that’s always learning and adapting based on the best available evidence.

Summing it all up

Making better product decisions is both a mindset and a capability that must be continuously nurtured and operationalized. It requires letting go of the outdated notion that we can rely on intuition alone. And we can’t simply let HiPPO-driven decisions drive our roadmaps. Being more evidence-guided demands that we become masters of customer insights, rigorous experimentation, and inclusive decision-making processes. The path is not always easy, but the payoff is immense – products that truly resonate with our customers, teams aligned around a shared definition of success, and a culture of continuous learning.

So I’ll finish this with a challenge…

Whether you’re a fresh-faced Product Manager or a seasoned Product Leader, let this essay be your “nudge” to take action. Adopt lightweight validation methods. Push for inclusive representation. Celebrate learnings, not just launches. By making evidence-based decision-making both a core value and an operational practice, you can confidently navigate uncertainty and build products that create meaningful value for your users and business.